Kuchwizzy

JF-Expert Member

- Oct 1, 2019


Greetings, folks

First, let me admit that I don't usually finish the threads I start, much like other JF lecturers.

There are usually two main reasons: losing interest or being swamped with work. Sometimes you also don't get the sophisticated audience you were expecting for the topic (for this one I expect a small audience anyway).

But this thread will be different. I will do my best to keep it active even if it takes a whole year or has only two followers.

The mathematical foundation of neural networks is not very hard. If you studied Advanced Mathematics (Form 5 & 6), you can digest 90% of the concepts.

What makes a neural network so powerful is not the math or its architecture. It is its ability to generalize from what it learns from large numbers of independent random examples, in line with the LLN.

"The law of large numbers (LLN) is a mathematical law that states that the average of the results obtained from a large number of independent random samples converges to the true value, if it exists" - Wikipedia
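To get a feel for the quote above, here is a minimal sketch in plain Python. It is only an illustration, not part of the lectures: the die-roll setup and the function name `sample_mean_of_die_rolls` are my own assumptions. It averages more and more rolls of a fair die and compares the result with the true mean of 3.5.

```python
import random


def sample_mean_of_die_rolls(num_rolls, seed=0):
    """Return the average of `num_rolls` simulated fair six-sided die rolls."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    total = sum(rng.randint(1, 6) for _ in range(num_rolls))
    return total / num_rolls


# Expected value of a fair die: (1 + 2 + 3 + 4 + 5 + 6) / 6
TRUE_MEAN = 3.5

for n in (10, 1_000, 100_000):
    mean = sample_mean_of_die_rolls(n)
    print(f"n={n:>7}  sample mean={mean:.4f}  error={abs(mean - TRUE_MEAN):.4f}")
```

With more rolls the sample mean should typically land closer to 3.5. That is the LLN at work on a toy problem; how this relates to a network learning from many examples is left for the later lectures.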

(We will explain what I just said in more detail in the final lectures.)

Let's start with a word from our motivational speaker, Prof. Richard Feynman

Prerequisites: I assume you are comfortable with Python programming and have the fundamentals of pure mathematics, especially topics such as matrices, vectors, logic, functions, calculus (differentiation and partial derivatives), and the basic concepts of linear algebra, statistics, and probability.

Any advanced mathematical concepts that you did not cover at Advanced level will be explained as we go.

Also make sure you have a PC, a Gmail account, extra IQ points to digest these concepts, and a great interest in and passion for AI.

The links to the lectures are below (we are currently at Lecture 15).

Lecture 1 - Easy For Me, Hard For You

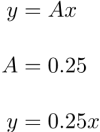

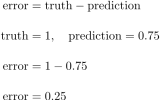

Lecture 2 - A Simple Predicting Machine

Lecture 3 - Classifying is Not Very Different from Predicting

Lecture 4 - Sometimes One Classifier Is Not Enough

Lecture 5 - Neurons, Nature’s Computing Machines

Lecture 6 - Following Signals Through A Neural Network

Lecture 7 - Matrix Multiplication is Useful .. Honest!

Lecture 8 - A Three Layer Example with Matrix Multiplication

Lecture 9 - Learning Weights From More Than One Node

Lecture 10 - Backpropagating Errors with Matrix Multiplication

Lecture 11 - How Do We Actually Update Weights?

Lecture 12 - Gradient Descent Algorithm

Lecture 13 - Mean Squared Error (Loss) Function and Mathematics of Gradient Descent Algorithm

Lecture 14 - Derivative of Error with respect to any weight between input and hidden layers

Lecture 15 - How to update weights using Gradient Descent
