Kuchwizzy

JF-Expert Member

- Oct 1, 2019

- Thread starter

- #61

Part 13 - Mean Squared Error (Loss) Function and the Mathematics of the Gradient Descent Algorithm

From the start, we saw that the Error is the difference between the network prediction, O, and the target value from the training dataset, T.

It is a very simple equation: it measures the difference between the output and the target value, and our job is to reduce that difference as much as we can. But will it serve us well as we go forward?

NB: The error function goes by several names: error function, loss function and cost function. They all refer to the same thing. In some contexts a distinction can be drawn, but most of the time the terms are used interchangeably.

The table below shows three types of error functions, including this one.

We have the network output, O, the target output, T, and three types of error functions.

The first is the simple difference between the output and the target, which we already know. The weakness of this approach shows up when we want to judge the overall performance of the whole network by adding up all the errors.

With this error function, some errors tend to cancel others out, which gives us a wrong judgement of the network's performance.

You can see in the table that if we add up all the errors we get 0, which would mean the network has no error overall (performs perfectly), even though it gave two incorrect predictions (0.5 instead of 0.4, and 0.7 instead of 0.8).

The reason is that negative and positive errors cancel each other out. Even when they don't cancel completely to 0, you can see that this is not a good way to measure network error.
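To make the cancellation concrete, here is a minimal Python sketch that uses only the two incorrect predictions mentioned above, read as outputs 0.5 and 0.7 against targets 0.4 and 0.8. It assumes the error is computed as E = T − O; flipping the sign convention does not change the conclusion. It sums the errors under all three candidate error functions.

```python
# Illustrative (output, target) pairs taken from the two incorrect predictions
# mentioned in the text: 0.5 instead of 0.4, and 0.7 instead of 0.8.
outputs = [0.5, 0.7]
targets = [0.4, 0.8]

def error_sums(outputs, targets):
    """Return the summed error under three candidate error functions."""
    errors = [t - o for o, t in zip(outputs, targets)]  # assumed convention: E = T - O
    plain = sum(errors)                      # positive and negative errors cancel
    absolute = sum(abs(e) for e in errors)   # no cancellation, but a V-shaped curve
    squared = sum(e ** 2 for e in errors)    # no cancellation and a smooth curve
    return plain, absolute, squared

plain, absolute, squared = error_sums(outputs, targets)
print(f"sum(T - O)   = {plain:.4f}")
print(f"sum|T - O|   = {absolute:.4f}")
print(f"sum(T - O)^2 = {squared:.4f}")
```

The plain sum comes out as zero (up to floating-point rounding) even though both predictions are wrong, while the absolute and squared sums are about 0.2 and 0.02.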

The second type of error function takes the absolute values of the errors (keeping only positive values) to avoid errors cancelling out.

The drawback of this approach is that the absolute function does not have a smooth curve: it has a sharp corner where it reaches its minimum (e.g. at x = 0), so its slope jumps abruptly there.

Here is a graph of a simple absolute function to show what we mean:

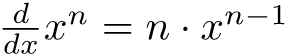

We saw that for a function to be differentiable, it must change smoothly as its parameters change, without discontinuities or abrupt changes of any kind. But notice that as x approaches 0 (at x = 0), the absolute function suddenly changes direction (from decreasing to increasing, or vice versa).

We can't use this approach because the gradient descent algorithm, which relies on the function being differentiable (its first derivative) in order to work, cannot handle a V-shaped curve like this. Also, its slope does not get smaller as we approach its local minimum, so there is a risk of overshooting and bouncing around that point forever.
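The overshooting problem can be seen in a small Python sketch. It assumes a one-dimensional error E(x) with its minimum at x = 0, a fixed learning rate of 0.1 (a hypothetical value), and, for |x|, a slope of −1 or +1 on either side of 0. The same plain gradient descent loop is run on E(x) = |x| and on E(x) = x².

```python
import math

def sign(x):
    """Slope of |x|: -1 left of 0, +1 right of 0 (we pick 0 at x = 0)."""
    if x == 0:
        return 0.0
    return math.copysign(1.0, x)

def descend(grad, x0, lr, steps):
    """Plain gradient descent: repeatedly step against the slope."""
    x = x0
    path = [x]
    for _ in range(steps):
        x = x - lr * grad(x)
        path.append(x)
    return path

lr = 0.1  # hypothetical fixed learning rate
abs_path = descend(sign, x0=0.35, lr=lr, steps=50)            # E(x) = |x|
sq_path = descend(lambda x: 2 * x, x0=0.35, lr=lr, steps=50)  # E(x) = x^2

print("last |x| steps:", [round(v, 3) for v in abs_path[-4:]])
print("last x^2 steps:", [round(v, 6) for v in sq_path[-4:]])
```

With |x| the step size never shrinks, so x keeps jumping between about +0.05 and −0.05 around the minimum. With x² the slope (2x) shrinks as x approaches 0, so the steps get smaller and x settles towards the minimum.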

The third type of error function is the squared error function, which means we simply square the error function we are used to.

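We already know that the squared error function is differentiable: it changes smoothly as its variables change, at every point on the graph. So we have solved our problem of errors cancelling out, and we still keep the advantage of being able to use gradient descent. Unlike the absolute function, its slope also gets smaller as we approach the minimum, which reduces the risk of overshooting.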

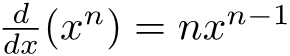

This candidate error function has another advantage too: the derivative of a squared function is easy to find, so it is also cheap to compute.
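As a quick illustration, take a single output and assume the squared error E = (T − O)² as above. The chain rule then gives a derivative that is just a multiple of the plain error, and it shrinks to 0 as O approaches T:

$$
E = (T - O)^2 \quad\Rightarrow\quad \frac{\partial E}{\partial O} = -2\,(T - O)
$$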

Mathematics of Gradient Descent

If we imagine the overall error function as a curve, then each value of a weight in the neural network represents a particular location, or point, on that curve.

This is a 2D (plane) representation of what we described above.

What we need to know now is the slope, or gradient, of the error function at that location, that is, at that weight (and in general at any location or weight), in the diagram above.

(Mathematically, a partial derivative works just like the ordinary derivative you are used to. The difference is that in a partial derivative we differentiate the function with respect to only one variable while keeping the others constant. In our case we want the gradient of the error function with respect to this particular variable, so we don't need to worry about all the other weights; we keep them constant.

We use partial derivatives when dealing with a multivariate function, as in this case.)
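"Keep the other weights constant" can be checked numerically. The sketch below uses a made-up two-weight error surface (the function `error` is hypothetical, chosen only for illustration) and estimates ∂E/∂w1 with a central finite difference, nudging w1 while w2 stays fixed.

```python
def error(w1, w2):
    """Hypothetical two-weight error surface, used only for illustration."""
    return (w1 - 1.0) ** 2 + 3.0 * (w2 + 2.0) ** 2 + w1 * w2

def partial_w1(f, w1, w2, h=1e-6):
    """Central-difference estimate of dE/dw1 with w2 held constant."""
    return (f(w1 + h, w2) - f(w1 - h, w2)) / (2 * h)

w1, w2 = 0.5, 0.0
print(partial_w1(error, w1, w2))  # analytic value: 2*(w1 - 1) + w2 = -1.0
```

The estimate matches the analytic partial derivative 2(w1 − 1) + w2, which you get by differentiating `error` with respect to w1 and treating w2 as a constant.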

Here we want to know the direction of the slope so that we can move this weight in the opposite direction.
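A minimal sketch of "move the weight in the opposite direction of the slope", assuming a plain update with a hypothetical learning rate of 0.1 (the name `step` and the learning rate value are illustrative, not from this series):

```python
def step(weight, slope, learning_rate=0.1):
    """Move the weight against the slope of the error (hypothetical learning rate)."""
    return weight - learning_rate * slope

print(step(0.5, slope=-1.0))  # negative slope: the weight increases
print(step(0.5, slope=2.0))   # positive slope: the weight decreases
```

Subtracting the slope means a downhill direction is always chosen, whichever side of the minimum the weight is on.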

Now let's look at this diagram: a simple neural network with 3 layers, each layer having 2 neurons.

Although we will refer to this diagram, we will generalise our derivation to fit a neural network of any size.

Let's look at the notation in that diagram:

As you can see, we have kept it as general as possible so that our final equation fits a neural network of any size.

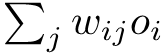

To start, let's focus on the weight between any node in the hidden layer and any node in the output layer that it connects to.

In our notation above, we have named this particular weight

So, imagine we are standing on this mountain of the error function (in a deep neural network, a very complex, high-dimensional space).

And remember that we are focusing only on this weight and ignoring all the others.

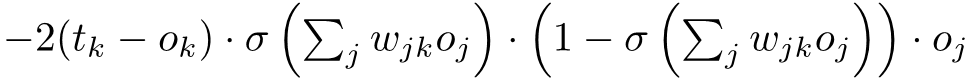

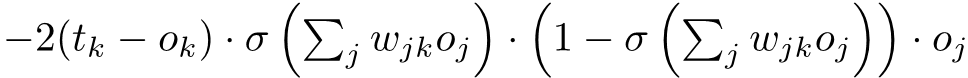

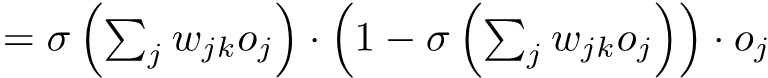

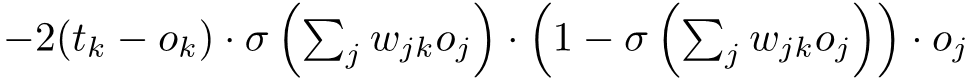

So, mathematically, we just need to compute the partial derivative of the Error

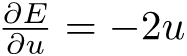

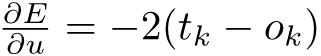

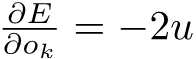

Mathematically, that gradient is

But

So,

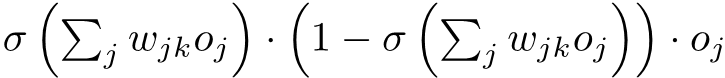

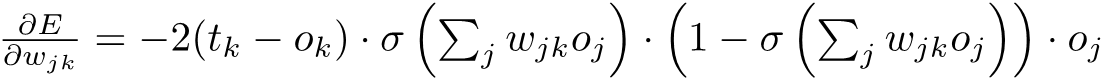

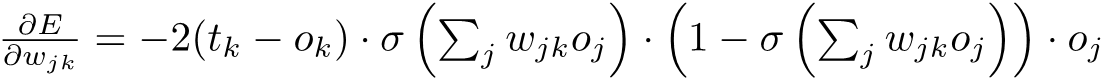

So, expanding our expression, we get this:

Now, let's expand our sigma notation.

As we can see, we would have to take the derivative of every instance of the squared error with respect to the weight.

This is computationally expensive, considering that we could have thousands or even billions of these instances, depending on the number of output neurons/nodes.

But let's recall what we learned during the forward pass: each weight influences the output of the neuron it is connected to, and has no influence on the output of a node it is not connected to.

That is why, when we propagate errors back during backpropagation, each node receives its share of the errors from the output nodes it is connected to.
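This locality is what saves us from differentiating every term of the sum. The Python sketch below checks it numerically on a hypothetical 2-hidden-node, 2-output-node slice of a network. It assumes a sigmoid activation at the output nodes and E = Σₖ (tₖ − oₖ)², and all weights, hidden outputs and targets are made-up values. Nudging the weight from hidden node j to output node k changes the total error by the same amount as it changes node k's squared error alone.

```python
import math

def sigmoid(x):
    """Assumed activation function for the output nodes."""
    return 1.0 / (1.0 + math.exp(-x))

def forward(W, hidden):
    """Output layer: o_k = sigmoid(sum_j W[j][k] * hidden[j])."""
    n_out = len(W[0])
    return [sigmoid(sum(W[j][k] * hidden[j] for j in range(len(hidden))))
            for k in range(n_out)]

def total_error(W, hidden, targets):
    """E = sum over output nodes k of (t_k - o_k)^2."""
    return sum((t - o) ** 2 for t, o in zip(targets, forward(W, hidden)))

def node_error(W, hidden, targets, k):
    """Squared error of output node k only."""
    return (targets[k] - forward(W, hidden)[k]) ** 2

def numeric_grad(f, W, j, k, h=1e-6):
    """Central difference of f with respect to W[j][k], other weights fixed."""
    Wp = [row[:] for row in W]
    Wm = [row[:] for row in W]
    Wp[j][k] += h
    Wm[j][k] -= h
    return (f(Wp) - f(Wm)) / (2 * h)

# Hypothetical values for a 2-hidden, 2-output slice of the network.
hidden = [0.6, 0.3]            # outputs of the hidden nodes
W = [[0.9, 0.2],               # W[j][k]: hidden node j -> output node k
     [0.3, 0.8]]
targets = [0.4, 0.8]

j, k = 0, 1
g_total = numeric_grad(lambda M: total_error(M, hidden, targets), W, j, k)
g_node = numeric_grad(lambda M: node_error(M, hidden, targets, k), W, j, k)
print(g_total, g_node)  # agree up to rounding: only node k depends on W[j][k]
```

Because W[j][k] feeds only output node k, every other term of the sum is unchanged by the nudge, so the other terms' derivatives with respect to this weight are zero.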

To be continued...