Kuchwizzy

- Oct 1, 2019

Part 05 - Neurons, Nature’s Computing Machines

Computers can perform calculations at tremendous speed (computing power). Despite this power, computers cannot perform the kinds of complex tasks that the brains of living creatures handle, not even those of small creatures like pigeons.

Finding food, building a nest, or fleeing danger are all very complex tasks, yet a pigeon's brain handles them with ease. It does so with little computing power compared to a computer built from billions of electronic components.

After researchers puzzled over this for a long time, it turned out that the difference lies in how computers and the brains of living creatures are built (an architectural difference).

A computer processes data, or performs calculations, step by step (sequentially), and there is no vagueness in its calculations (no fuzziness): everything is exact.

The brain, on the other hand, performs many calculations at the same time (in parallel), and its calculations have some fuzziness: some steps are not clear-cut. This property lets the brain solve problems in situations where the answer is not obvious.

This difference in how they work is what allows the brain to solve more complex tasks than a computer can.

Let's look at the brain's basic computing unit: the neuron.

A neuron is a type of cell that carries information (signals) from the body to the brain, and from the brain back to the body.

All neurons, whatever their shape, transmit electrical signals from one place to another. A signal starts at the dendrites, travels along the axon to the terminals, and the resulting signal (the output) is passed on as input to the next neuron.

This is how your brain can sense sound, light, touch, and every other kind of information: signals from all parts of your body are carried by the neurons of your nervous system to your brain, which is itself made of neurons.

How many neurons do we need to perform complex tasks? The human brain is made up of roughly 100 billion neurons (more recent estimates put it at about 86 billion). A fly's brain has roughly 100,000 neurons, yet a fly can perform tasks more complex than a computer can.

100,000 neurons is a number we could replicate on a computer.

So what is the brain's secret? Despite being built from relatively few computing components that compute slowly, the brain can perform more complex tasks than a computer.

The secret lies in how neurons work, so let's look at how neurons operate.

A neuron receives an electrical input and produces another electrical output.

In this respect, isn't it just like the simple predictor or classifier we dealt with earlier?

Can we model a neuron as a linear function (y = Ax + B)?

Good idea, but no: a neuron's output does not follow a linear function.

Evidence shows that neurons do not react instantly. Instead, they wait until the incoming signal is strong enough, and only then does the neuron produce an output signal of its own.

It is like filling a cup with water: the water only spills over once the cup is full to the brim.

This behavior, where a neuron suppresses a signal until it crosses a certain level (a threshold), makes sense: it lets the neuron ignore tiny or noisy signals (signals that carry no real meaning) and pass on only strong, meaningful ones.

Let's see whether mathematics can help us model this kind of behavior.

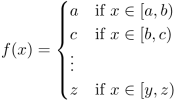

A function that takes an input and generates an output while taking some threshold into account is known as an activation function.

Mathematics offers many activation functions of this kind; the simple step function behaves exactly this way.

Example:

For small input values of x (less than 2) the output is low (0), but for high values of x (greater than or equal to 2) the output jumps up.
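A minimal Python sketch of the step function from the example above. The threshold of 2 comes from the example; using 1 as the "high" output value is an assumption.

```python
def step(x, threshold=2.0):
    """Step activation: output 0 below the threshold, 1 at or above it.

    The threshold of 2 follows the example in the text; using 1 for the
    "high" output is an assumption.
    """
    return 1 if x >= threshold else 0


for x in [0.5, 1.9, 2.0, 3.5]:
    print(f"step({x}) = {step(x)}")
```

Nothing changes between 0.5 and 1.9, and then the output flips all at once at 2.0. That abrupt jump is exactly what makes the step function a rough model of a real neuron.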

To model this neuron behavior more accurately, we can use a different activation function instead of the step function.

The reason for dropping the step function is that its output jumps suddenly when the threshold is reached, whereas a neuron's response rises gradually, or smoothly, as the threshold is approached.

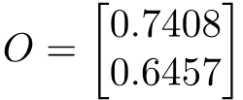

Instead, we can choose another activation function, known as the sigmoid function or logistic function, which produces an S-shaped curve: $y = \frac{1}{1 + e^{-x}}$.

Here e is the mathematical constant known as Euler's number, approximately 2.71828 (only approximately, because its decimal expansion never ends; it is a transcendental number).

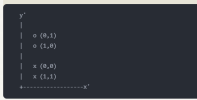

This is the shape of the sigmoid function's graph.

Another reason to choose the sigmoid as an activation function is that it is easy to calculate with. Its output also always lies between 0 and 1, which makes it convenient for modeling probabilities and for classification.
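A minimal sketch of the sigmoid $y = 1/(1 + e^{-x})$ in Python. The helper `classify` is hypothetical: it assumes we read the output as a probability and label anything at or above 0.5 as class 1. The two branches in `sigmoid` compute the same value but avoid overflowing `math.exp` for large negative inputs.

```python
import math


def sigmoid(x):
    """Logistic sigmoid 1 / (1 + e^(-x)); mathematically always between 0 and 1."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    # For negative x, rewrite as e^x / (1 + e^x) so math.exp never overflows.
    z = math.exp(x)
    return z / (1.0 + z)


def classify(x):
    """Hypothetical helper: treat sigmoid(x) as a probability, label 1 if >= 0.5."""
    return 1 if sigmoid(x) >= 0.5 else 0


for x in [-6, -2, 0, 2, 6]:
    print(f"{x:>3} -> {sigmoid(x):.4f}  class {classify(x)}")
```

Since `sigmoid(0)` is exactly 0.5, this particular reading puts the decision boundary at x = 0; unlike the step function, outputs near the boundary change gradually.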

A biological neuron can take more than one input, not just one; we saw this too when we dealt with Boolean functions.

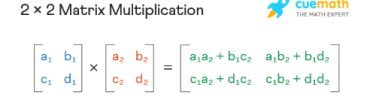

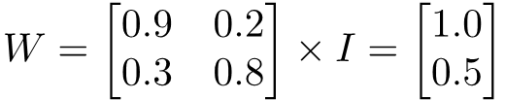

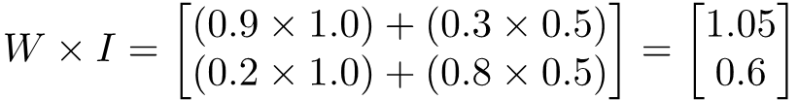

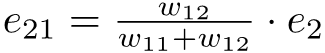

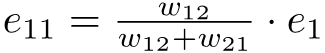

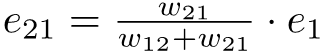

So how do we deal with more than one input? We simply add them all up and apply the sigmoid function to the resulting sum.

If the combined signals are not strong enough (if the resulting sum is not higher than the threshold), the sigmoid function suppresses them (the output will be close to 0), just as a biological neuron does. If the combined signals are strong, the neuron reacts (fires). This behavior gives our calculations the fuzziness we want, and it introduces non-linearity, because the activation function's output is not directly proportional to its input.
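A minimal sketch of the neuron described above: add up all the inputs, then apply the sigmoid to the total. The input values are made up for illustration. Note that with the plain sigmoid the switch-over point is a total of 0, so "weak" here means a total well below 0; moving that point would need an extra threshold term, which this sketch leaves out.

```python
import math


def sigmoid(x):
    """Logistic sigmoid: 1 / (1 + e^(-x))."""
    return 1.0 / (1.0 + math.exp(-x))


def neuron(inputs):
    """Add up all incoming signals, then apply the sigmoid to the total."""
    total = sum(inputs)
    return sigmoid(total)


weak = [-2.0, -1.5, 0.5]    # made-up inputs, combined signal -3.0
strong = [2.0, 1.5, 0.5]    # made-up inputs, combined signal 4.0

print(f"weak combined signal   -> {neuron(weak):.3f}")    # close to 0: suppressed
print(f"strong combined signal -> {neuron(strong):.3f}")  # close to 1: the neuron "fires"
```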

NB: Keep in mind that in A.I. we are dealing with abstract mathematical concepts. An artificial neuron or neural network is just a set of mathematical equations, not a physical thing inside the computer. Here a neuron is simply a sigmoid function that receives inputs from other neurons and sends its output as input to further neurons, which are also sigmoid functions (or whichever activation function you decide to use).

They are useful and powerful mathematical abstractions.

Each neuron is connected to other neurons, and together they form a network of neurons, a neural network (hence the name).

Notice that each neuron takes inputs from many preceding neurons and sends its output, as input, to many following neurons.

To model this behavior, we need a network of neurons in which each neuron is connected to other neurons.

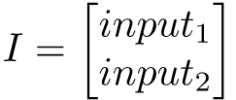

You may ask: which part of a neural network actually learns? Which parameter or variable can we adjust to get correct outputs, as we did with the simple predictor or classifier?

The simple answer: the connections between one neuron and another.

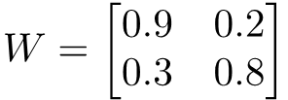

The strength of the connection between two neurons is known as its weight.

What we do during training is adjust the strength of these connections, the weights between neurons.
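To see where weights enter the picture, here is a sketch in which each input is multiplied by the weight of its connection before the inputs are summed. The function name `weighted_neuron` and all the numbers are hypothetical. Setting a weight to 0 effectively cuts that connection, the most extreme way of weakening it.

```python
import math


def sigmoid(x):
    """Logistic sigmoid: 1 / (1 + e^(-x))."""
    return 1.0 / (1.0 + math.exp(-x))


def weighted_neuron(inputs, weights):
    """Multiply each input by its connection weight, sum, then apply the sigmoid."""
    if len(inputs) != len(weights):
        raise ValueError("need exactly one weight per input")
    total = sum(x * w for x, w in zip(inputs, weights))
    return sigmoid(total)


inputs = [1.0, 0.5]                              # made-up signals
before = weighted_neuron(inputs, [0.9, 0.3])     # both connections active
after = weighted_neuron(inputs, [0.9, 0.0])      # second connection switched off
print(f"before: {before:.3f}, after: {after:.3f}")
```

Training, in this picture, means nudging numbers like `0.9` and `0.3` until the outputs come out right.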

Another name for a neuron is a node.

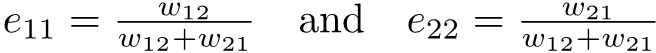

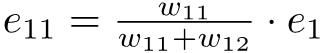

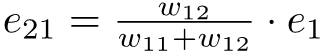

A quick note on notation: $w_{2,3}$ is the weight (strength of connection) between the second neuron, or node, in the first layer and the third neuron, or node, in the next (middle) layer.
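A sketch of the $w_{i,j}$ notation in code, assuming a small layer of 3 nodes feeding 3 nodes, with made-up weights stored in a nested list `W`. Python counts from 0 while the notation counts from 1, so the hypothetical helper `w(i, j)` does the translation. Each node in the next layer sums every input times its weight, then applies the sigmoid.

```python
import math


def sigmoid(x):
    """Logistic sigmoid: 1 / (1 + e^(-x))."""
    return 1.0 / (1.0 + math.exp(-x))


# W[i][j] is the weight from node i+1 in layer 1 to node j+1 in the next layer
# (Python indexes from 0; the notation in the text counts from 1). Made-up values.
W = [
    [0.9, 0.2, 0.1],   # from node 1
    [0.3, 0.8, 0.5],   # from node 2
    [0.4, 0.2, 0.6],   # from node 3
]


def w(i, j):
    """Return w_{i,j} using the 1-based numbering from the text."""
    return W[i - 1][j - 1]


def next_layer(inputs):
    """Each node j sums every input i times w_{i,j}, then applies the sigmoid."""
    n_out = len(W[0])
    return [
        sigmoid(sum(inputs[i] * W[i][j] for i in range(len(inputs))))
        for j in range(n_out)
    ]


print(w(2, 3))  # 0.5: from node 2 (layer 1) to node 3 (next layer)
print([round(v, 3) for v in next_layer([1.0, 0.5, -1.5])])
```

Because every node connects to every node in the next layer, this 3-by-3 layer has 9 weights, one per connection.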

You may wonder why we chose to connect the neurons in this pattern when there are many other ways to connect them.

The answer is that this pattern is simple to encode as computer instructions (code). During training, the network itself decides how the neurons should be connected, by emphasizing some connections (weights) and de-emphasizing others.

Now is a good time to formalize everything we have learned so far.

Biological Neurons and Their Efficiency

Biological neurons can perform complex tasks with relatively slow speeds and few resources compared to computers due to several factors:

- Massive Parallelism: Biological neurons operate in a highly parallel manner. The human brain has approximately 86 billion neurons, each connected to thousands of other neurons, allowing for simultaneous processing of vast amounts of information.

- Adaptability and Plasticity: Biological neurons exhibit plasticity, meaning they can change the strength and structure of their connections in response to learning and experience. This adaptability allows the brain to optimize its processing capabilities efficiently.

Definitions

Neuron

A neuron is a nerve cell that is the fundamental building block of the nervous system. Neurons receive, process, and transmit information through electrical and chemical signals. In the context of artificial neural networks, a neuron is a computational unit that receives inputs, processes them through a function, and produces an output.

Activation Function

An activation function in a neural network is a mathematical function applied to the input signal of a neuron to produce an output signal. It introduces non-linearity into the network, enabling it to learn and model complex patterns. Common activation functions include the sigmoid function, ReLU (Rectified Linear Unit), and tanh (hyperbolic tangent).

Sigmoid Function

The sigmoid function is a type of activation function defined by the equation:

$$\sigma(x) = \frac{1}{1 + e^{-x}}$$

It maps input values to an output range between 0 and 1, making it useful for binary classification tasks. The sigmoid function has a smooth gradient, which helps in gradient-based optimization.
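The smooth gradient has a convenient closed form, $\sigma'(x) = \sigma(x)\,(1 - \sigma(x))$. The sketch below computes it and checks it against a numerical (central finite-difference) estimate; the helper names `sigmoid_grad` and `numeric_grad` are hypothetical.

```python
import math


def sigmoid(x):
    """Logistic sigmoid: 1 / (1 + e^(-x))."""
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x):
    """Derivative of the sigmoid via the identity s'(x) = s(x) * (1 - s(x))."""
    s = sigmoid(x)
    return s * (1.0 - s)


def numeric_grad(f, x, h=1e-6):
    """Central finite-difference estimate of f'(x), used here only as a check."""
    return (f(x + h) - f(x - h)) / (2 * h)


for x in [-3.0, 0.0, 2.5]:
    print(f"x={x:>4}: formula {sigmoid_grad(x):.6f}, numeric {numeric_grad(sigmoid, x):.6f}")
```

The gradient peaks at 0.25 when x = 0 and shrinks towards 0 for large positive or negative inputs; reusing the already computed σ(x) makes it cheap to evaluate.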

Weight

In a neural network, a weight is a parameter that adjusts the input signal to a neuron. Weights are learned during the training process and determine the strength and direction of the input signal's influence on the neuron's output. Mathematically, weights are multiplied by input values and summed up before applying the activation function.

NB: Imagine each connection as a "wire" carrying a signal, and the weight determines the strength of that signal.

Node

A node in a neural network, also known as a neuron or unit, is a basic processing element that receives inputs, applies a weight to each input, sums them up, and passes the result through an activation function to produce an output.

Example

Here's a visual example of a simple neural network with definitions:

- Neuron (Node): Each circle in the network diagram represents a neuron.

- Weight (w): Each arrow connecting the neurons has an associated weight.

- Activation Function: The function applied at each neuron to produce an output. For instance, a sigmoid function can be used.

- Sigmoid Function: $\sigma(x) = \frac{1}{1 + e^{-x}}$

Next: Following Signals Through A Neural Network